Graphics CODEx

A collection of (graphics) programming reference documents

Async Pipelines

Overview

When multiple commands are submitted to the GPU, we can consider them all to execute in sequence[1]. However, some pipelines, such as copy and compute, can run on their own async GPU queues. This lets their work overlap with the other submitted work, at the cost of requiring manual synchronisation between the different queues.

Example

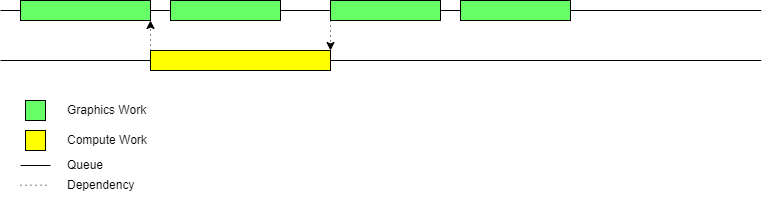

This example shows a set of commands in which a compute task depends on an earlier graphics task, and a later graphics task depends on the compute task.

As you can see, before the compute task there is another graphics task that is unrelated to the dependency chain displayed in the image. By moving the compute task onto a separate queue, as illustrated below, we can overlap the unrelated graphics task with the compute task, which results in a shorter frame time. Notice, however, that because the third graphics task depends on the compute task, the main queue will stall while it waits for the compute task on the async queue to finish.
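The effect on frame time can be sketched with a toy timeline model. This is only an illustration: the task names, the durations and the `schedule` helper are assumptions made up for this example, and the model assumes the two queues overlap perfectly without slowing each other down.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    queue: str         # queue the task is submitted to
    duration: int      # hypothetical cost in milliseconds
    deps: tuple = ()   # names of tasks that must finish first


def schedule(tasks):
    """Return {name: (start, end)} for tasks given in submission order.

    Each queue runs its own tasks one after another. A task also waits
    for every task it depends on, whichever queue that task ran on.
    """
    queue_free_at = {}
    times = {}
    for task in tasks:
        deps_done_at = max((times[d][1] for d in task.deps), default=0)
        start = max(queue_free_at.get(task.queue, 0), deps_done_at)
        end = start + task.duration
        times[task.name] = (start, end)
        queue_free_at[task.queue] = end
    return times


def frame_time(times):
    """The frame is done when the last task has finished."""
    return max(end for _, end in times.values())


def build(compute_queue):
    """Same four tasks; only the queue of the compute task changes."""
    return [
        Task("graphics_1", "main", 2),
        Task("graphics_2", "main", 3),  # unrelated to the dependency chain
        Task("compute", compute_queue, 4, ("graphics_1",)),
        Task("graphics_3", "main", 2, ("compute",)),
    ]


single = schedule(build("main"))
overlapped = schedule(build("async"))

# Time the main queue sits idle between graphics_2 ending and graphics_3 starting.
stall = overlapped["graphics_3"][0] - overlapped["graphics_2"][1]

print("single queue frame time:", frame_time(single))
print("async queue frame time: ", frame_time(overlapped))
print("main queue stall:       ", stall)
```

With these made-up durations the compute task runs alongside the unrelated graphics task, so the frame gets shorter, but the main queue still idles briefly before the third graphics task because the compute task finishes after the unrelated graphics task does.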

Resources

Async queues are very powerful, but need to be used carefully. Unlike the idea of multi-threading, this async work does not execute on separate hardware; it executes on the exact same hardware as the primary queue(s). As a result, if too much work is scheduled on the async queue, the overall frame time can actually increase, because the queues have to share resources and each one runs at a slower speed[2].
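A rough way to reason about this trade-off is a toy contention model. Everything here is an assumption for illustration, not a description of real hardware: the `overlapped_time` function, the `shared_rate` parameter (the speed of each queue, relative to running alone, while both queues are active) and the workload sizes are all hypothetical.

```python
def overlapped_time(main_ms, async_ms, shared_rate):
    """Time to finish both workloads when they start at the same moment.

    main_ms / async_ms: cost of each workload when it runs alone.
    shared_rate: hypothetical speed of each queue while both are active,
    relative to running alone (1.0 = no contention at all).
    """
    if not 0.0 < shared_rate <= 1.0:
        raise ValueError("shared_rate must be in (0, 1]")
    shorter, longer = sorted((main_ms, async_ms))
    # Both queues progress at shared_rate until the shorter workload is done.
    overlap_phase = shorter / shared_rate
    # The longer workload made the same progress; the rest runs at full speed.
    remaining = longer - shorter
    return overlap_phase + remaining


def serial_time(main_ms, async_ms):
    """Time when everything runs back to back on a single queue."""
    return main_ms + async_ms


main_ms, async_ms = 6.0, 4.0
for rate in (1.0, 0.75, 0.5, 0.4):
    t = overlapped_time(main_ms, async_ms, rate)
    verdict = "faster" if t < serial_time(main_ms, async_ms) else "not faster"
    print(f"shared_rate={rate}: {t:.2f} ms ({verdict} than serial)")
```

In this model, overlapping only pays off while each queue keeps more than half of its standalone speed. At exactly half, the overlapped frame takes as long as the serial one, and below that the contention makes the frame longer than running everything on one queue.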

[1] In practice, the driver ensures this for you in older APIs (e.g. OpenGL), while in more modern APIs (e.g. Vulkan) this is the responsibility of the programmer through pipeline and memory barriers.

[2] In practice, certain types of GPU work use different parts of the hardware, so care can be taken to only overlap sets of work that use different parts of the hardware.

Last modified on Monday 31 January 2022 at 16:05:11