Graphics CODEx

A collection of (graphics) programming reference documents

Compute Pipeline

Before reading this chapter, please read the Threading Model chapter first.

Overview

With GPU’s becoming more and more powerful every year, graphics programmers quickly started utilising the GPU more heavily and coming up with all sorts of techniques to make full use of it’s computational power. However, because only the graphics pipeline was available, all of these techniques had to adapt their data to be faked as geometric or texture data. IHV’s and graphics API developers realised that this could be done better and as a result, introduced the new compute pipeline.

The whole idea behind it is that it does nothing for you. It is a pipeline with a single programmable function stage with no hardware input or output components. All data has to be manually read and written by the compute shader.

Texture2D< float4 > g_inputTexture;

RWTexture2D< float2 > g_outputTexture;

static const float3 c_rgbToLuminance = float3

(

0.212639f,

0.715169f,

0.072192f,

);

[numthreads(16, 16, 1)]

void main

(

uint3 DispatchThreadID : SV_DispatchThreadID

)

{

const float4 colour = g_inputTexture[ DispatchThreadID.xy ];

const float averageLuminance = dot( colour.rgb, c_rgbToLuminance );

g_outputTexture[ DispatchThreadID.xy ] = float4( averageLuminance, colour.a );

}

Thread

At the lowest level, a compute shader consists out of multiple threads. Unlike vertex and pixel shaders, a single thread doesn’t have a predefined meaning. It is up to the shader writer to decide what a thread is supposed to do, even with possibilities of switching it mid-shader as it’s only a logical construct, not an actual predefined concept. This flexibility is largely what makes compute shaders so powerful.

In the example, one thread is responsible for reading a single pixel from g_inputTexture, calculating the average luminance and writing out

the newly calculated value for the single pixel to the g_outputTexture.

Thread Group

A thread group is a 3-dimensional grid of threads that execute together. The size of the thread group is defined at compile time, for example

with the numthreads instruction in HLSL as shown in the example defining a thread group size of 16x16x1, resulting in a total of 256 threads

per thread group.

The maximum amount of threads per dimension as well as total threads per group is usually defined by the graphics API. For example, DirectX

cs_5_0 limits the X and Y to 1024 threads and Z to 64, with the total maximum per group limited to 1024 threads.

Hardware Mapping

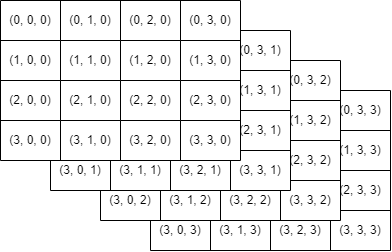

Thread groups are flattened into a list of threads in a z-ordering style:

uint threadID = thread3DIndex.z * ( c_threadCountX * c_threadCountY )

+ thread3DIndex.y * ( c_threadCountX )

+ thread3DIndex.x;

As explained in the Threading Model chapter, threads are grouped into fixed size blocks called waves. The sizes for a wave are predefined by the hardware, while the size of a thread group is chosen by the shader writer. As a result, the thread groups are split into waves. All waves that originate from the same thread group are scheduled on the same compute unit in order to have access to shared resources.

Group-shared memory

Every thread within a thread group has access to a small block of memory that is only accessible by threads within the same thread group. This is called group-shared memory, but you will also often see it referred to as LDS. This memory block is quite small, often being limited in size by the graphics API (e.g. DirectX limits the usage to a maximum of 32KiB) and is part of the compute unit. However, it is incredibly fast, which opens up a lot of doors for shader optimisation possibilities, such as caching texture fetches to avoid each thread having to re-sample a texture.

However, due to group-shared memory being a limited resource per compute unit, using too much can result in reduced occupancy, resulting in a direct loss of performance. For example, GCN compute units have 64KiB of LDS, meaning that if you use the full 32KiB, you will have a maximum occupancy of 2 as opposed to the theoretical maximum of 10.

Dispatch

We now have groups of threads that can execute together in pre-defined sizes decided at compile time. However, these threads still need to be kicked off.

Where a draw command is the core command for the graphics pipeline (e.g. DrawIndexedInstanced), the core command for the compute pipeline is the Dispatch

command.

This command takes three arguments, X, Y and Z, which defines a 3-dimensional grid of thread groups to be kicked off, similar to how a thread group

is a 3-dimensional group of threads. As this command is a CPU-side command, the amount of thread groups that can be kicked off is limited by the graphics API.

In our example, we have a thread group size of 16x16x1, with one thread being responsible for one pixel. If we want to execute for every pixel of our texture, the amount of thread groups we need to kick off is dependent on our texture resolution:

// As defined by our example [numthreads]

static const uint c_groupThreadCountX = 16;

static const uint c_groupThreadCountY = 16;

static const uint c_groupThreadCountZ = 1;

const uint dispatchCountX = ( ( inputTexture.GetWidth() + c_groupThreadCountX - 1 ) / c_groupThreadCountX );

const uint dispatchCountY = ( ( inputTexture.GetHeight() + c_groupThreadCountY - 1 ) / c_groupThreadCountY );

const uint dispatchCountZ = 1u;

// Kick off our compute shader

commandList.Dispatch( dispatchCountX, dispatchCountY, dispatchCountZ );

The

+ c_groupThreadCountX - 1is to ensure that we do an aligned divide. for instance ifinputTexture.GetWidth()was smaller thanc_groupThreadCountX, we still want to kick off at least one thread group.

If we have inputTexture be a size of 960x720, we would kick off 60x45x1 dispatches, resulting in a total thread count of 691’000 threads (16 * 16 * 60 * 45).

Coherency

Threads that belong to the same wave have implicit coherency, due to executing in lock-step. However, threads from the same thread group that have

been split into separate waves are not coherent. As a result, certain operations require manual synchronisation through the use of memory

and instruction barriers (e.g. HLSL GroupMemoryBarrierWithGroupSync()).

An example of this is when multiple threads pre-sample values of a texture and store them in group-shared memory. Before this write is visible to all other threads across waves, an instruction barrier needs to be issued to ensure that all threads have issued the write and a group-shared memory barrier needs to be issued to ensure that all writes from all threads have completed.

static const uint c_threadCountX = 16;

static const uint c_threadCountY = 16;

static const uint c_threadCountZ = 1;

Texture2D< float > g_inputTexture;

Texture2D< float > g_outputTexture;

groupshared float gs_values[ c_threadCountX * c_threadCountY * c_threadCountZ ];

[numthreads(c_threadCountX, c_threadCountY, c_threadCountZ)]

void main

(

uint3 DispatchThreadID : SV_DispatchThreadID,

uint3 GroupThreadID : SV_GroupThreadID

)

{

// Read a value from our input texture

float value = g_inputTexture[ DispatchThreadID.xy ];

// Calculate the flat index of our thread within our thread group

uint localIndex = ( GroupThreadID.z * c_threadCountX * c_threadCountY )

+ ( GroupThreadID.y * c_threadCountX )

+ GroupThreadID.x;

// Write our value into group-shared memory

gs_values[ localIndex ] = value;

// Ensure that each thread has issued the write and the writes have completed

// Note that this only synchronises waves that belong to the same thread-group

GroupMemoryBarrierWithGroupSync();

// Do some kind of operation on the values in group-shared memory

// If the previous barrier wasn't present, this operation would read stale memory

// as the writes may not have been completed yet, or worse, not even issued yet

float newValue = ReadValuesFromGroupShared();

// Write our newly calculated value to our output texture

g_outputTexture[ DispatchThreadID.xy ] = newValue;

}

Last modified on Wednesday 09 March 2022 at 20:35:22